Transforming two-dimensional (2D) photos into three-dimensional (3D) models has revolutionized industries such as gaming, architecture, medicine, e-commerce, and cultural preservation. Techniques like photogrammetry, AI-driven reconstruction, and structured light scanning allow creators to digitize real-world objects or scenes with high accuracy. Whether you’re a hobbyist using a smartphone or a professional building virtual worlds, this technology offers boundless possibilities. This article explains how to convert images into 3D models, covering the main methods, applications, practical tips, and emerging trends in concise, actionable points.

Core Methods for 3D Model Creation

Several techniques can convert 2D images into 3D models, each with unique advantages and use cases:

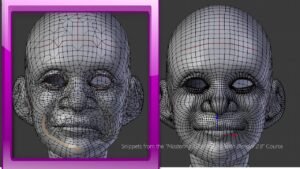

- Photogrammetry: Captures many overlapping photos (typically 50–200) from various angles. Software detects and matches keypoints across the images, estimates camera positions, triangulates a 3D point cloud, builds a polygon mesh, and applies textures. Agisoft Metashape also offers automatic masking to improve accuracy for complex objects. Tools: Agisoft Metashape, RealityCapture, Meshroom. Example: Photograph a historical monument to create a VR-ready 3D model.

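- Single-Image Reconstruction: AI predicts a 3D shape from one photo, typically by estimating a depth map with a neural network trained on large image datasets. Related neural rendering methods such as NeRF (neural radiance fields) reconstruct a scene from a set of photos or a short video rather than a single image. LUMA AI also refines textures during processing. Tools (most capture multiple frames rather than one photo): NVIDIA Instant NeRF, LUMA AI, Polycam. Example: Turn a shoe photo into a 3D model for e-commerce displays.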

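- Structured Light Scanning: Projects known light patterns onto an object; cameras capture how the patterns deform, and software calculates the 3D shape via triangulation. Artec 3D scanners offer sub-millimeter accuracy. On iPhones, the front TrueDepth camera projects a dot pattern (structured light), while the rear LiDAR sensor measures depth by time of flight; both make depth scanning widely accessible. Tools: Artec 3D, iPhone TrueDepth and LiDAR. Example: Scan a sculpture for an AR app.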
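
The triangulation step of the photogrammetry pipeline can be illustrated with a minimal sketch. It assumes the camera-pose step has already produced each camera's center and the 3D direction of the ray through a matched keypoint; `triangulate_midpoint` is a hypothetical helper, not part of any tool named above. It uses the simple midpoint method (the point halfway between the closest points on two rays), whereas full pipelines such as Meshroom refine many views together with bundle adjustment.

```python
# Minimal sketch: midpoint triangulation of one keypoint seen by two cameras.
# Assumes camera centers and ray directions are already known (hypothetical inputs).

Vec3 = tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _add(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def _scale(a: Vec3, s: float) -> Vec3:
    return (a[0] * s, a[1] * s, a[2] * s)


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def triangulate_midpoint(c1: Vec3, d1: Vec3, c2: Vec3, d2: Vec3) -> Vec3:
    """Return the midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = _sub(c1, c2)
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; the point cannot be triangulated")
    s = (b * e - c * d) / denom  # parameter of the closest point on ray 1
    t = (a * e - b * d) / denom  # parameter of the closest point on ray 2
    p1 = _add(c1, _scale(d1, s))
    p2 = _add(c2, _scale(d2, t))
    return _scale(_add(p1, p2), 0.5)


# Two cameras 2 units apart, both looking at the point (1, 2, 5).
print(triangulate_midpoint((0, 0, 0), (1, 2, 5), (2, 0, 0), (-1, 2, 5)))  # prints (1.0, 2.0, 5.0)
```

With real, noisy keypoints the two rays rarely meet exactly; the midpoint is a simple compromise, which is why production software refines all points and cameras jointly.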
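
For single-image reconstruction, once a network has predicted a depth map, turning it into 3D points is plain pinhole-camera geometry: X = (u − cx) · Z / fx and Y = (v − cy) · Z / fy. The sketch below assumes the depth map is a grid of per-pixel depths (zero meaning no estimate) and that the intrinsics `fx`, `fy`, `cx`, `cy` are known or estimated; `depth_to_points` is a hypothetical helper. Many monocular depth models predict only relative depth, so the resulting cloud may need rescaling.

```python
# Minimal sketch: back-project a (hypothetical) predicted depth map into 3D points
# with a pinhole camera model. fx, fy are focal lengths in pixels; (cx, cy) is the
# principal point.

def depth_to_points(depth: list[list[float]], fx: float, fy: float,
                    cx: float, cy: float) -> list[tuple[float, float, float]]:
    points = []
    for v, row in enumerate(depth):        # v = pixel row
        for u, z in enumerate(row):        # u = pixel column, z = depth
            if z <= 0:
                continue                   # skip pixels without a valid estimate
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A 2x2 depth map; the zero entry is treated as missing.
print(depth_to_points([[2.0, 0.0], [1.0, 4.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0))
# prints [(0.0, 0.0, 2.0), (0.0, 1.0, 1.0), (4.0, 4.0, 4.0)]
```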
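
Structured light systems often project a sequence of binary stripe patterns so that each camera pixel can work out which projector column lit it; triangulating that column against the camera ray then gives depth. The sketch below shows one common encoding, Gray codes, under simplifying assumptions: one intensity reading per pattern, plus fully lit (`white`) and unlit (`black`) reference readings for thresholding. The helper names are hypothetical, and this is not a description of how Artec or Apple devices work internally.

```python
# Minimal sketch of Gray-code structured light decoding (simplified assumptions:
# one intensity reading per pattern, plus all-white and all-black reference shots).

def binary_to_gray(n: int) -> int:
    return n ^ (n >> 1)


def gray_to_binary(g: int) -> int:
    b = g
    shift = g >> 1
    while shift:              # XOR in every right-shifted copy of g
        b ^= shift
        shift >>= 1
    return b


def gray_code_patterns(num_bits: int, width: int) -> list[list[int]]:
    """Stripe patterns to project: patterns[k][col] is 1 if column col is lit in pattern k.
    Pattern 0 carries the most significant bit; width should not exceed 2**num_bits."""
    return [[(binary_to_gray(col) >> (num_bits - 1 - k)) & 1 for col in range(width)]
            for k in range(num_bits)]


def decode_pixel(intensities: list[float], white: float, black: float) -> int:
    """Recover the projector column that lit a camera pixel from its readings."""
    threshold = (white + black) / 2
    gray = 0
    for value in intensities:  # most significant bit first
        gray = (gray << 1) | (1 if value > threshold else 0)
    return gray_to_binary(gray)


# Simulate a pixel lit by projector column 5 with 3 patterns (8 columns).
patterns = gray_code_patterns(num_bits=3, width=8)
readings = [220.0 if p[5] else 15.0 for p in patterns]
print(decode_pixel(readings, white=230.0, black=10.0))  # prints 5
```

Gray codes are a popular choice because neighboring columns differ in only one bit, so a pixel sitting on a stripe boundary is off by at most one column.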

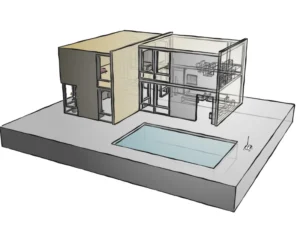

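- Stereo Vision: Uses two images taken from slightly different positions and computes depth from the horizontal shift (disparity) between matching pixels, then builds a 3D mesh. OpenCV provides stereo calibration, rectification, and disparity functions for custom camera setups. Tools: OpenCV, StereoPi. Example: Capture a building facade for architectural visualization.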

- AI Generative Models: Generative AI creates 3D models from a single image or text prompt, predicting geometry and textures. DreamFusion supports text-to-3D. Tools: DreamFusion, 3Dpresso. Example: Generate a 3D chair model for furniture design.

- Volumetric Capture: Multiple cameras capture a subject simultaneously from many angles, creating high-fidelity 3D models, including of moving subjects. The technique is popular in film studios, and Microsoft’s tools have helped bring it to a wider audience. Example: Record a person’s movements for a 3D avatar in VR.
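
For the stereo vision method, depth follows from disparity: with rectified images, focal length f in pixels, and baseline B between the cameras, a point that shifts by d pixels lies at depth Z = f · B / d. The sketch below pairs that formula with a deliberately naive block matcher on one rectified scanline, using the sum of absolute differences (SAD); the function names and parameters are hypothetical. OpenCV's stereo matchers (for example `cv2.StereoSGBM_create`) do this far more robustly over whole images.

```python
import math

# Minimal sketch: naive SAD block matching on one rectified scanline, then
# depth from disparity via Z = f * B / d. Parameters are illustrative.

def disparity_scanline(left: list[float], right: list[float],
                       window: int = 1, max_disp: int = 16) -> list[int]:
    n = len(left)
    disparities = [0] * n
    for x in range(n):
        best_d, best_cost = 0, math.inf
        for d in range(max_disp + 1):
            # A feature at column x in the left image appears at x - d in the right.
            if x - window < 0 or x + window >= n or x - window - d < 0:
                continue  # window would fall outside one of the rows
            cost = sum(abs(left[x + k] - right[x + k - d])
                       for k in range(-window, window + 1))
            if cost < best_cost:
                best_d, best_cost = d, cost
        disparities[x] = best_d
    return disparities


def depth_from_disparity(d: float, focal_px: float, baseline_m: float) -> float:
    """Depth in meters; zero disparity means the point is effectively at infinity."""
    return math.inf if d <= 0 else focal_px * baseline_m / d


right = [0, 0, 0, 0, 10, 50, 90, 50, 10, 0, 0, 0, 0, 0, 0, 0]
left = [0, 0, 0] + right[:-3]          # same row, shifted 3 pixels to the right
disp = disparity_scanline(left, right, window=1, max_disp=8)
print(disp[9], depth_from_disparity(disp[9], focal_px=600.0, baseline_m=0.1))
# disparity 3, depth of about 20 m
```

Flat, textureless regions give ambiguous matches in this sketch, which mirrors the "featureless surfaces" problem discussed under practical considerations.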

Applications Across Industries

Creating 3D models from photos has transformative applications across many fields:

- Cultural Heritage Preservation: Digitizes artifacts or sites for virtual tours or 3D-printed replicas. CyArk preserves Machu Picchu digitally; Google Arts & Culture offers virtual museum models. Example: Create a 3D model of an ancient vase for global access.

- E-Commerce and Product Visualization: Creates 3D product models for interactive AR shopping, boosting engagement. Shopify integrates 3D models into product pages. Example: Display 3D jewelry models online.

- Gaming and Animation: Converts photos into 3D assets, saving production time. Unreal Engine integrates photogrammetry outputs. Example: Use photos of a tree to create assets for a 3D forest in Unity.

- Medical Visualization: Builds 3D models from medical imaging, such as stacks of CT or MRI slices, for surgical planning or education. AI enhances organ modeling accuracy. Example: Model a bone from CT scans for orthopedics.

- DIY Projects: Enables 3D printing of custom designs from photos. Thingiverse communities share scanned models. Example: Scan a broken machine part to print a replacement.

- Augmented Reality (AR): Powers AR apps with 3D models from photos. Snapchat’s AR lenses use scanned objects. Example: Create AR filters for interior design apps.

- Education and Training: Provides 3D models for interactive learning, like virtual dissections or architectural simulations. Universities use photogrammetry for archaeology education. Example: Model an artifact for a virtual classroom.

Practical Considerations for Success

High-quality 3D models require attention to key factors:

- Image Quality: High-resolution, well-lit photos with 70–80% overlap (for photogrammetry) ensure accuracy. Blurry images cause flawed models. Use diffused lighting to avoid shadows.

- Processing Power: Photogrammetry and AI require robust computing. RealityCapture Cloud or NVIDIA RTX GPUs speed up processing for large datasets.

- Software Accessibility: Meshroom suits beginners; RealityCapture offers advanced features like automated alignment for professionals. Choose based on project complexity.

- Hardware Needs: Smartphones work for casual use; DSLRs or LiDAR scanners (e.g., iPhone 14 Pro) provide precision. Drones aid large-scale scanning, like buildings.

- Surface Challenges: Reflective or featureless surfaces (e.g., glass) disrupt reconstruction. Chalk powder or polarized filters improve results.

- File Optimization: Large models need decimation (reducing the polygon count) to shrink file size for web or mobile use. Blender’s Decimate modifier offers mesh simplification.
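
The 70–80% overlap guideline can be turned into a simple shot plan. A minimal sketch, assuming a flat subject such as a facade captured in a straight pass (on foot or by drone), a pinhole camera with a known horizontal field of view, and a constant camera distance: each frame covers a footprint W = 2 · D · tan(FOV / 2), and consecutive shots are spaced W · (1 − overlap) apart. The function name is hypothetical.

```python
import math

# Minimal sketch: plan camera positions for a straight pass along a flat subject
# (e.g., a facade) so that consecutive frames overlap by a target fraction.

def plan_straight_pass(subject_length_m: float, distance_m: float,
                       hfov_deg: float, overlap: float) -> tuple[float, float, int]:
    """Return (footprint width, spacing between shots, number of shots)."""
    if not 0 <= overlap < 1:
        raise ValueError("overlap must be in [0, 1)")
    footprint = 2 * distance_m * math.tan(math.radians(hfov_deg) / 2)
    spacing = footprint * (1 - overlap)
    if subject_length_m <= footprint:
        return footprint, spacing, 1
    # Small epsilon guards against floating-point round-off pushing ceil() up by one.
    shots = math.ceil((subject_length_m - footprint) / spacing - 1e-9) + 1
    return footprint, spacing, shots


footprint, spacing, shots = plan_straight_pass(40.0, distance_m=10.0, hfov_deg=90.0, overlap=0.75)
print(f"footprint {footprint:.1f} m, spacing {spacing:.1f} m, {shots} shots")
# footprint 20.0 m, spacing 5.0 m, 5 shots
```

Raising the overlap from 75% to 80% shrinks the spacing to 4 m in this example, so higher overlap trades more photos (and processing time) for more reliable matching.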
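
Decimation can be done in several ways. Blender's Decimate modifier, in its Collapse mode, progressively merges vertices while taking the mesh shape into account; the sketch below instead shows vertex clustering, a simpler technique: snap vertices to a grid of cubes, merge each cube's vertices into their average, and drop triangles that collapse. The function name and the `cell_size` parameter are hypothetical, and this is not Blender's algorithm.

```python
import math
from collections import defaultdict

# Minimal sketch of vertex-clustering decimation (not Blender's Decimate algorithm).
Vertex = tuple[float, float, float]
Face = tuple[int, int, int]


def cluster_decimate(vertices: list[Vertex], faces: list[Face],
                     cell_size: float) -> tuple[list[Vertex], list[Face]]:
    # 1. Assign each vertex to a cubic grid cell.
    cell_of = []
    members = defaultdict(list)
    for i, (x, y, z) in enumerate(vertices):
        key = (math.floor(x / cell_size), math.floor(y / cell_size), math.floor(z / cell_size))
        cell_of.append(key)
        members[key].append(i)

    # 2. Replace each cell's vertices with their average position.
    new_index = {}
    new_vertices = []
    for key, idxs in members.items():
        n = len(idxs)
        new_index[key] = len(new_vertices)
        new_vertices.append(tuple(sum(vertices[i][axis] for i in idxs) / n for axis in range(3)))

    # 3. Remap triangles, dropping collapsed and duplicate ones.
    new_faces = []
    seen = set()
    for face in faces:
        a, b, c = (new_index[cell_of[v]] for v in face)
        if a == b or b == c or a == c:
            continue  # triangle shrank to a line or a point
        canonical = tuple(sorted((a, b, c)))
        if canonical in seen:
            continue  # another triangle already uses these three vertices
        seen.add(canonical)
        new_faces.append((a, b, c))
    return new_vertices, new_faces


# A 3x3 grid of vertices (8 triangles) simplified with 1.5-unit cells.
grid = [(float(x), float(y), 0.0) for y in range(3) for x in range(3)]
tris = []
for y in range(2):
    for x in range(2):
        i = y * 3 + x
        tris += [(i, i + 1, i + 4), (i, i + 4, i + 3)]
verts, faces = cluster_decimate(grid, tris, cell_size=1.5)
print(len(grid), len(tris), "->", len(verts), len(faces))  # prints 9 8 -> 4 2
```

Vertex clustering is fast but ignores surface shape, so it can blur fine detail that an edge-collapse approach would preserve.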

Getting Started with 3D Model Creation

Getting started is accessible to beginners and professionals alike:

- Beginner Tools: Polycam or LUMA AI offer user-friendly smartphone scanning. Ideal for small objects like mugs or toys, with built-in tutorials.

- Professional Workflows: Agisoft Metashape supports photogrammetry with batch processing. Udemy or YouTube courses teach workflows from capture to rendering.

- Experimentation: Start with textured objects (e.g., sculptures), then try complex scenes like rooms. Practice improves skills and model quality.

- Community Resources: X, Reddit, or Sketchfab host tutorials on tools like Meshroom. Search recent posts for software updates or tips.

- Testing and Validation: Use CloudCompare to verify model accuracy, for example by measuring distances on the model and comparing them with the real object, or by computing deviations from a reference scan.
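
To quantify accuracy beyond spot measurements, tools such as CloudCompare can compute the distance from each point of a model to the nearest point of a reference cloud. A brute-force sketch of that idea follows, assuming both clouds are already aligned and in the same units; the function name is hypothetical. It is O(N × M), so real tools use spatial indexes such as octrees for large clouds.

```python
import math

# Minimal sketch of a cloud-to-cloud (nearest-neighbor) distance check.
# Assumes both clouds are already aligned and in the same units.

Point = tuple[float, float, float]


def cloud_to_cloud(compared: list[Point], reference: list[Point]) -> dict[str, float]:
    if not compared or not reference:
        raise ValueError("both clouds must contain points")
    # Distance from each compared point to its nearest reference point (brute force).
    dists = [min(math.dist(p, q) for q in reference) for p in compared]
    return {
        "mean": sum(dists) / len(dists),
        "rms": math.sqrt(sum(d * d for d in dists) / len(dists)),
        "max": max(dists),
    }


reference = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
model = [(0.0, 0.0, 0.1), (1.0, 0.0, -0.1), (0.0, 1.0, 0.2)]
stats = cloud_to_cloud(model, reference)
print({k: round(v, 4) for k, v in stats.items()})
# prints {'mean': 0.1333, 'rms': 0.1414, 'max': 0.2}
```

Reporting the maximum alongside the mean and RMS helps catch localized defects, such as a warped edge, that an average would hide.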

Future Trends in 3D Model Creation

Emerging technologies are advancing image-to-3D conversion:

- AI Advancements: Improved NeRF or diffusion models enable faster, more accurate single-image reconstructions, reducing processing times to minutes.

- Mobile Integration: iPhone LiDAR or Apple’s A17 Pro chip enables real-time 3D scanning, making it accessible to casual users.

- Cloud Processing: Platforms like Autodesk Forge (now Autodesk Platform Services) offer cloud-based reconstruction, reducing local hardware needs and enabling team collaboration.

- Real-Time Applications: 5G and edge computing support real-time 3D modeling for AR/VR, such as virtual fashion shows or live events.

Conclusion

Converting images into 3D models blends art and technology, bridging physical and digital realms. From photogrammetry’s precise models to AI’s single-image solutions, the methods are versatile and user-friendly. Applications range from preserving heritage to enhancing e-commerce and gaming. By paying attention to image quality, hardware, and software, anyone can create 3D models. Emerging trends like AI advancements and mobile integration promise greater accessibility. Start with apps like Polycam or professional tools like RealityCapture, and transform your 2D photos into 3D masterpieces today.